He's waving back at you!

project context

I designed a "Friendly" AI model.

I was a research student in a group focused on machine learning. For the class's final project, we each presented a visual AI model that we had worked on over the last couple of weeks. My goal for this assignment was to conceptualize what AI could look like as a physical entity.

TIMELINE

March 2021 - June 2021

TEAM

Directed Research Group

TOOLS

Procreate, iPad, Raspberry Pi, Terminal, Lobe

DELIVERABLES

Visual Machine Learning Model

directed research group (DRG) context

I learned more about visual AI and ML models through research.

Every week, I sat in on a two-hour discussion to learn more about machine learning through papers, articles, and practice. I worked on projects using Lobe, a visual machine learning program that lets you upload images or videos and add labels to them. Then I would connect the model to a program running on a Raspberry Pi and see how it behaved. I followed a handful of tutorials from https://learn.adafruit.com.

how it works

I used Lobe to create a visual ML model.

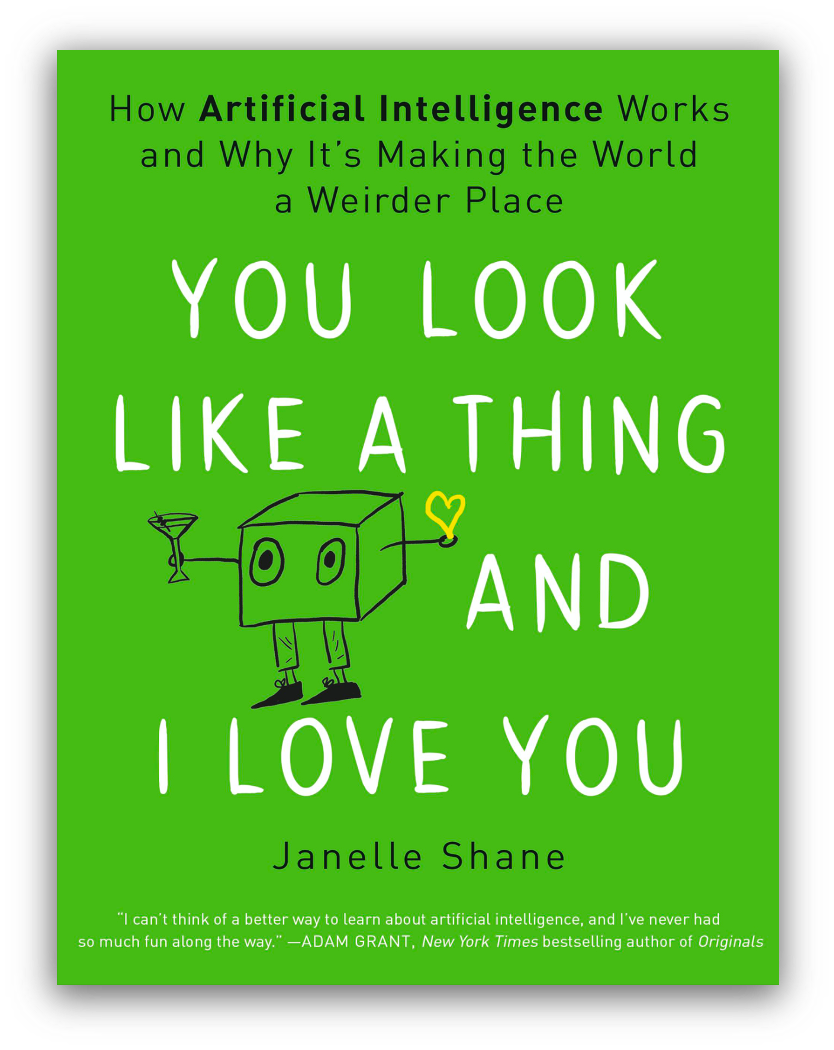

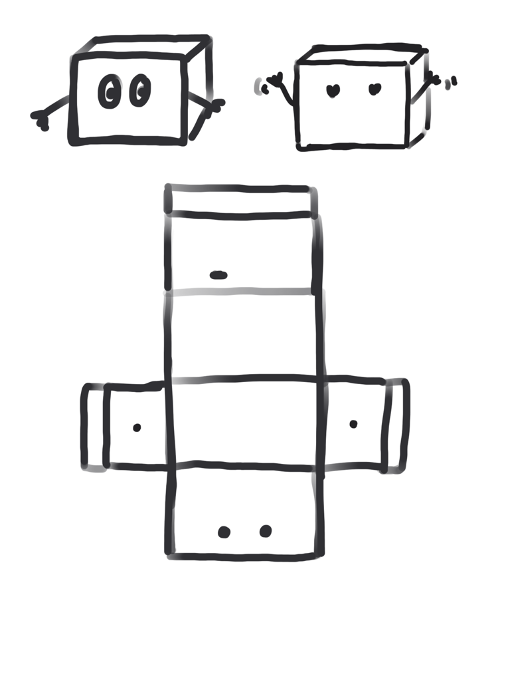

I came up with a simple visual ML model that reads specific gestures and responds depending on what you do. I wanted to give the AI a more physical form, so I recreated a character from one of our class readings, You Look Like a Thing and I Love You.

context

What is machine learning (ML)?

An algorithm is essentially a set of instructions or steps to follow. We use algorithms in everyday life, like cooking from a recipe, solving a math equation, or finding our way home. Machine learning (ML) adds another layer: we give the algorithm data to learn from, so it can solve more complicated problems with more confidence. You've probably seen these algorithms hard at work on social media, like getting an ad on Instagram for a product that you briefly mentioned to a friend (and probably thought: "my phone is listening to me").

Physical model

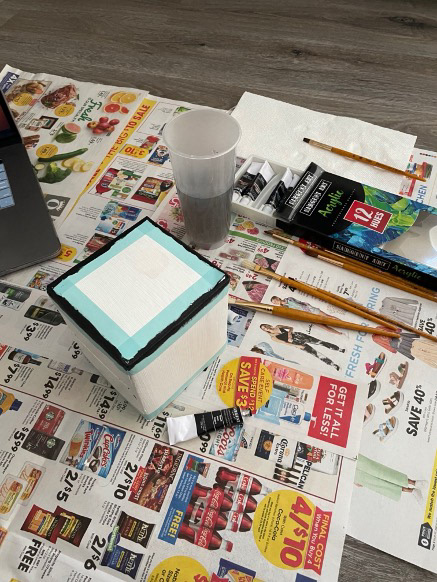

I used at-home resources to create my physical prototype.

Because this was a COVID-era project, I was very limited in the resources available to me at the time. I reused an empty tissue box to house my Raspberry Pi and painted over it with acrylics to recreate the character.

Visual assets

I created visuals to add feedback to my prototype.

The Raspberry Pi came with a camera, which allowed the ML model to see the user and give a response. The model replies depending on which of four gestures it recognizes. I decided on the following gestures and responses:

1) Wave -- "Hello"

2) Present a flower -- "Oo, pretty!"

3) Listen -- "You look like a thing and I love you!"

4) Person -- "Hey there, human."
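The mapping above can be sketched as a small lookup in Python. This is only an illustration, not the code that ran on the prototype: the label names, the `respond` helper, and the 0.6 confidence threshold are all assumptions, and the predicted label and confidence would come from whatever the trained model returns.

```python
# Hypothetical label names; the labels used in the original Lobe project are not given.
RESPONSES = {
    "wave": "Hello",
    "flower": "Oo, pretty!",
    "listen": "You look like a thing and I love you!",
    "person": "Hey there, human.",
}


def respond(label, confidence, threshold=0.6):
    """Return the reply for a predicted label, or None if unsure or unknown.

    The threshold value is an assumed default, not taken from the project.
    """
    if confidence < threshold:
        # The model is not confident enough, so stay quiet.
        return None
    # Normalize the label so "Wave" and "wave" match the same entry.
    return RESPONSES.get(label.strip().lower())


if __name__ == "__main__":
    print(respond("Wave", 0.92))
    print(respond("flower", 0.30))
```

Keeping the responses in one dictionary means adding a fifth gesture only takes a new label in the model and one new entry here.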

results

Greeting a human.

When the model sees a human, it greets them with the appropriate image.

results

Presenting a flower.

When the ML model sees a flower, it's in awe!

results

Waving back.

When the ML model sees a hand wave at it, it'll wave back! (Refer to the first video at the top of the page to see it wave.)

results

Giving a compliment.

When the ML model sees the user raise their hand to their ear, it'll compliment them.

takeaways

I learned how much value machine learning brings to UX.

Before taking this class, I used to think that developing and training an AI model was simple: just feed it a set of images and wait for it to learn. But it's so much more than that. Images take a lot of time to curate, and even changes in environment and lighting make a huge difference. Creating different gestures and capturing data that is as diverse as possible (different users, different skin tones, etc.) helps your model respond more accurately with the appropriate labels.